Compute total number of electrons, spins.

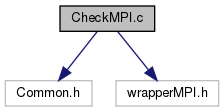

#include "Common.h"#include "wrapperMPI.h" Include dependency graph for CheckMPI.c:

Include dependency graph for CheckMPI.c:Go to the source code of this file.

Functions

| Return type | Function | Description |
|---|---|---|
| int | CheckMPI (struct BindStruct *X) | Define the number of sites in each PE (DefineList.Nsite). Reduce the number of electrons (DefineList.Ne) and the total Sz (DefineList.Total2Sz) by their contributions from the inter-process region. |
| void | CheckMPI_Summary (struct BindStruct *X) | Print information on the MPI parallelization. Modify DefineList::Tpow in the inter-process region. |

Detailed Description

Compute total number of electrons, spins.

Definition in file CheckMPI.c.

Function Documentation

◆ CheckMPI()

int CheckMPI (struct BindStruct *X)

Define the number of sites in each PE (DefineList.Nsite), and reduce the number of electrons (DefineList.Ne) and the total Sz (DefineList.Total2Sz) by their contributions from the inter-process region.
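The site split can be pictured with the small helper below. It is a minimal sketch, not the code in CheckMPI.c: the function `split_sites` and the `site_dim` array are hypothetical, and it assumes that the inter-process sites are the last sites and that the number of processes must equal the product of their local dimensions (for example 4 per site when a Hubbard site is stored as one up bit and one down bit, 2 for spin 1/2, and 2S+1 for a general spin S).

```c
/*
 * Hypothetical helper (not part of CheckMPI.c): decide how many of the
 * nsite_mpi sites stay inside each process. The last sites are assumed to
 * be the inter-process ones, and their local dimensions must multiply to
 * nproc. site_dim[i] is the local dimension of site i (assumed: 4 for a
 * Hubbard site with one up bit and one down bit, 2 for spin 1/2, 2S+1 for
 * spin S). Returns the number of intra-process sites, or -1 if no split
 * matches nproc exactly.
 */
int split_sites(const int *site_dim, int nsite_mpi, int nproc) {
  long long ndim_inter = 1; /* dimension spanned by the inter-process sites */
  for (int nsite = nsite_mpi; nsite >= 0; nsite--) {
    if (ndim_inter == nproc) return nsite;           /* exact match found */
    if (ndim_inter > nproc || nsite == 0) return -1; /* overshoot or no sites left */
    ndim_inter *= site_dim[nsite - 1];               /* move one more site out */
  }
  return -1;
}
```

With eight sites of local dimension 4, `split_sites(site_dim, 8, 16)` returns 6 (two inter-process sites, 4 × 4 = 16), while 8 processes are rejected with -1 because 8 is not a power of 4.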

Branch for each model:

- For Hubbard and Kondo systems, define the number of intra-process sites, DefineList::Nsite.
- For canonical Hubbard systems, DefineList::Nup, DefineList::Ndown, and DefineList::Ne take a different value in each PE.
- For N-conserved canonical Hubbard systems, DefineList::Ne takes a different value in each PE.
- For canonical Kondo systems, DefineList::Nup, DefineList::Ndown, and DefineList::Ne take a different value in each PE.
- For spin-1/2 systems, define the number of intra-process sites, DefineList::Nsite.
- For general spin systems, define the number of intra-process sites, DefineList::Nsite.
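The per-model reductions in the list above can be sketched for the two simplest canonical cases. This is a hypothetical illustration, not code from CheckMPI.c: the `Counts` struct and both functions are invented here, and the encoding of the rank (one base-4 digit per Hubbard site with bit 0 = up and bit 1 = down, one bit per spin-1/2 site with 1 = up) is an assumption. Each PE subtracts what its own inter-process configuration already holds, which is why the remaining totals differ from PE to PE.

```c
/* Hypothetical per-PE conserved quantities (names invented for this sketch). */
struct Counts {
  int nup;      /* up electrons left for the intra-process sites */
  int ndown;    /* down electrons left for the intra-process sites */
  int ne;       /* total electrons left for the intra-process sites */
  int total2sz; /* 2*Sz left for the intra-process sites */
};

/*
 * Canonical Hubbard: read `rank` as one base-4 digit per inter-process site
 * (assumed encoding: bit 0 = up electron, bit 1 = down electron) and remove
 * those electrons from the totals.
 */
void reduce_hubbard(struct Counts *c, int rank, int n_inter_sites) {
  for (int i = 0; i < n_inter_sites; i++) {
    int occ = rank % 4; /* occupation of inter-process site i */
    rank /= 4;
    if (occ & 1) { c->nup--;   c->ne--; }
    if (occ & 2) { c->ndown--; c->ne--; }
  }
  c->total2sz = c->nup - c->ndown; /* 2*Sz of the remaining electrons */
}

/*
 * Canonical spin 1/2: read `rank` as one bit per inter-process site
 * (assumed encoding: 1 = up, 0 = down) and remove each site's
 * contribution (+1 for up, -1 for down) from 2*Sz.
 */
void reduce_spin_half(struct Counts *c, int rank, int n_inter_sites) {
  for (int i = 0; i < n_inter_sites; i++) {
    int up = rank % 2;
    rank /= 2;
    c->total2sz -= up ? 1 : -1;
  }
}
```

For rank 7 on two inter-process Hubbard sites (digits 3 and 1), starting from Nup = Ndown = 4 and Ne = 8, the sketch leaves Nup = 2, Ndown = 3, Ne = 5. In the N-conserved case only `ne` would be meaningful.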

Check the number of processes for the Boost mode.

Parameters

- [in,out] X

Definition at line 27 of file CheckMPI.c.

References BindStruct::Boost, cErrNProcNumber, cErrNProcNumberGneralSpin, cErrNProcNumberHubbard, cErrNProcNumberSet, cErrNProcNumberSpin, BindStruct::Def, exitMPI(), FALSE, BoostList::flgBoost, DefineList::iCalcModel, DefineList::iFlgGeneralSpin, BoostList::ishift_nspin, ITINERANT, BoostList::list_6spin_star, DefineList::LocSpn, myrank, DefineList::Ndown, DefineList::Ne, DefineList::NLocSpn, nproc, DefineList::Nsite, DefineList::NsiteMPI, BoostList::num_pivot, DefineList::Nup, DefineList::SiteToBit, stdoutMPI, DefineList::Total2Sz, DefineList::Total2SzMPI, TRUE, and BoostList::W0.

Referenced by check().

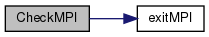

Here is the call graph for this function:

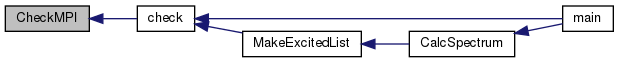

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function:◆ CheckMPI_Summary()

void CheckMPI_Summary (struct BindStruct *X)

Print information on the MPI parallelization and modify DefineList::Tpow in the inter-process region.

Print the configuration of the inter-process region of each PE in binary format (except for general spin systems).
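As an illustration of the binary printout, the helper below formats a PE's inter-process configuration as a string that could then be written with `fprintf`. It is a hypothetical sketch: the function name, the most-significant-bit-first order, and the assumption that the configuration is simply the lowest `nbits` bits of the rank (two bits per site for Hubbard-type models, one for spin 1/2) are choices made here, not taken from CheckMPI.c.

```c
#include <stddef.h>

/*
 * Hypothetical helper: write the lowest `nbits` bits of `rank` into `buf`
 * as '0'/'1' characters, most significant bit first, NUL-terminated.
 * At most 32 bits are written (unsigned long holds at least 32 bits).
 * If the buffer is too small, only the lowest bufsize - 1 bits are written.
 */
void config_to_binary(unsigned long rank, int nbits, char *buf, size_t bufsize) {
  size_t n;
  if (bufsize == 0) return;
  n = nbits > 0 ? (size_t)nbits : 0;
  if (n > 32) n = 32;
  if (n > bufsize - 1) n = bufsize - 1; /* leave room for '\0' */
  for (size_t i = 0; i < n; i++)
    buf[i] = ((rank >> (n - 1 - i)) & 1UL) ? '1' : '0';
  buf[n] = '\0';
}
```

For example, rank 6 with four inter-process bits (two Hubbard-type sites under the assumed encoding) gives "0110".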

Reset DefineList::Tpow[DefNsite], DefineList::Tpow[DefNsite + 1], ... to describe the inter-process space. For Hubbard and Kondo systems, define DefineList::OrgTpow, which is not affected by the number of processes.
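The Tpow reset can be sketched for a Hubbard-type model as below. The layout is an assumption not spelled out on this page: two bits per site, so the local space uses bits 0 to 2*Nsite - 1 and the inter-process space uses bits 2*Nsite to 2*NsiteMPI - 1. In this sketch `tpow` restarts at 1 at that boundary, while `orgtpow` keeps the plain 2^i table over all bits. `build_tpow` is a hypothetical name.

```c
/*
 * Hypothetical sketch (Hubbard-type model, two bits per site assumed).
 * tpow:    2^i for the local bits [0, 2*nsite), then restarting at 1 for
 *          the inter-process bits [2*nsite, 2*nsite_mpi).
 * orgtpow: plain 2^i over all 2*nsite_mpi bits, independent of nproc.
 * Both arrays must hold at least 2*nsite_mpi entries.
 */
void build_tpow(long long *tpow, long long *orgtpow, int nsite, int nsite_mpi) {
  int nbit_local = 2 * nsite;
  int nbit_all = 2 * nsite_mpi;
  for (int i = 0; i < nbit_all; i++) {
    /* restart the power series at the first inter-process bit */
    tpow[i] = (i == 0 || i == nbit_local) ? 1 : tpow[i - 1] * 2;
    orgtpow[i] = (i == 0) ? 1 : orgtpow[i - 1] * 2;
  }
}
```

With Nsite = 3 and NsiteMPI = 4, `tpow` ends with 16, 32, 1, 2 while `orgtpow` ends with 32, 64, 128, so in this sketch a bit's weight within the process number is read from `tpow` and its weight in the full, unsplit configuration from `orgtpow`.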

Parameters

- [in,out] X

Definition at line 310 of file CheckMPI.c.

References BindStruct::Check, BindStruct::Def, exitMPI(), FALSE, DefineList::iCalcModel, CheckList::idim_max, CheckList::idim_maxMPI, DefineList::iFlgGeneralSpin, DefineList::iFlgScaLAPACK, myrank, DefineList::Ndown, DefineList::Ne, nproc, DefineList::Nsite, DefineList::NsiteMPI, DefineList::Nup, DefineList::OrgTpow, DefineList::SiteToBit, stdoutMPI, SumMPI_i(), SumMPI_li(), DefineList::Total2Sz, and DefineList::Tpow.

Referenced by check().

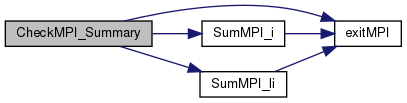

Here is the call graph for this function:

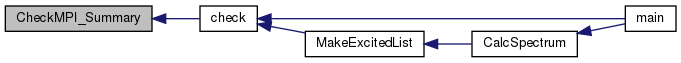

Here is the call graph for this function: Here is the caller graph for this function:

Here is the caller graph for this function: