File for calculating total spin.

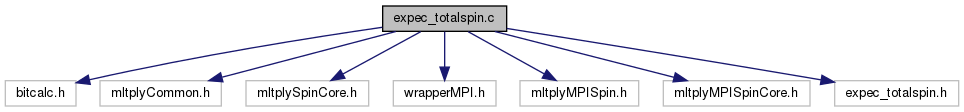

- `#include <bitcalc.h>`
- `#include "mltplyCommon.h"`
- `#include "mltplySpinCore.h"`
- `#include "wrapperMPI.h"`
- `#include "mltplyMPISpin.h"`
- `#include "mltplyMPISpinCore.h"`
- `#include "expec_totalspin.h"`

Include dependency graph for expec_totalspin.c:

Functions

| Type | Function | Description |
| --- | --- | --- |
| `int` | `expec_totalspin(struct BindStruct *X, double complex *vec)` | Parent function for the total-spin calculation. |
| `void` | `totalspin_Hubbard(struct BindStruct *X, double complex *vec)` | Calculates the total spin for the Hubbard model. |
| `void` | `totalspin_HubbardGC(struct BindStruct *X, double complex *vec)` | Calculates the total spin for the Hubbard model in the grand canonical ensemble. |
| `void` | `totalspin_Spin(struct BindStruct *X, double complex *vec)` | Calculates the total spin for the spin model. |
| `void` | `totalspin_SpinGC(struct BindStruct *X, double complex *vec)` | Calculates the total spin for the spin model in the grand canonical ensemble. |
| `int` | `expec_totalSz(struct BindStruct *X, double complex *vec)` | |
| `void` | `totalSz_HubbardGC(struct BindStruct *X, double complex *vec)` | Calculates the total Sz for the Hubbard model in the grand canonical ensemble. |
| `void` | `totalSz_SpinGC(struct BindStruct *X, double complex *vec)` | Calculates the total Sz for the spin model in the grand canonical ensemble. |

Detailed Description

File for calculating total spin.

- Version 0.2: modified to treat the case of general spin.
- Version 0.1

Definition in file expec_totalspin.c.
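For orientation, the two expectation values this file is concerned with can be written as below. This is a sketch under an assumption: the page itself gives no formulas, so the standard definitions are used here, with $|\psi\rangle$ standing for the eigenvector `vec` and $N$ for the number of sites. The results presumably correspond to the fields `PhysList::Sz` and `PhysList::s2` named in the reference lists.

```latex
\langle S^z_{\mathrm{tot}} \rangle
  = \sum_{i=1}^{N} \langle \psi | S^z_i | \psi \rangle ,
\qquad
\langle \mathbf{S}^2 \rangle
  = \sum_{i=1}^{N} \sum_{j=1}^{N} \langle \psi | \mathbf{S}_i \cdot \mathbf{S}_j | \psi \rangle
```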

Function Documentation

◆ expec_totalspin()

`int expec_totalspin(struct BindStruct *X, double complex *vec)`

Parent function for the total-spin calculation.

- Parameters
  - [in,out] X: data list of calculation parameters
  - [in] vec: eigenvector
- Return values
  - 0: the calculation finished normally

Definition at line 50 of file expec_totalspin.c.

References BindStruct::Def, DefineList::iCalcModel, BindStruct::Large, LargeList::mode, BindStruct::Phys, PhysList::s2, PhysList::Sz, DefineList::Total2SzMPI, totalspin_Hubbard(), totalspin_HubbardGC(), totalspin_Spin(), and totalspin_SpinGC().

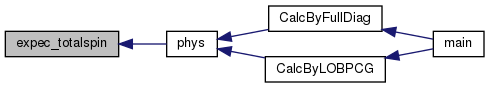

Referenced by phys().
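The reference list above suggests that the parent function selects one of the four model-specific workers according to `DefineList::iCalcModel`. A minimal sketch of that dispatch pattern follows. Everything in it is hypothetical: the enum values, the reduced structure layouts, the `_stub` and `_sketch` names, the `g_last_worker` marker, and the `-1` return for an unknown model are assumptions made for illustration, not taken from expec_totalspin.c. Only the return value `0` for a normal finish comes from the documentation above.

```c
#include <assert.h>
#include <complex.h>
#include <stddef.h>

/* Hypothetical model tags; the real values of iCalcModel are not shown here. */
enum CalcModel { MODEL_HUBBARD, MODEL_HUBBARD_GC, MODEL_SPIN, MODEL_SPIN_GC };

/* Reduced stand-ins for the real structures (assumed layouts). */
struct DefineList { int iCalcModel; };
struct PhysList   { double s2; double Sz; };
struct BindStruct { struct DefineList Def; struct PhysList Phys; };

/* Illustration-only marker recording which worker stub was called. */
int g_last_worker = -1;

/* Stubs: the real workers would accumulate the expectation values from vec. */
void totalspin_Hubbard_stub(struct BindStruct *X, double complex *vec)
{ (void)X; (void)vec; g_last_worker = MODEL_HUBBARD; }
void totalspin_HubbardGC_stub(struct BindStruct *X, double complex *vec)
{ (void)X; (void)vec; g_last_worker = MODEL_HUBBARD_GC; }
void totalspin_Spin_stub(struct BindStruct *X, double complex *vec)
{ (void)X; (void)vec; g_last_worker = MODEL_SPIN; }
void totalspin_SpinGC_stub(struct BindStruct *X, double complex *vec)
{ (void)X; (void)vec; g_last_worker = MODEL_SPIN_GC; }

/* Dispatch on the model tag; returns 0 when a worker was run. */
int expec_totalspin_sketch(struct BindStruct *X, double complex *vec)
{
  assert(X != NULL && vec != NULL);

  /* Reset the outputs before the selected worker fills them in. */
  X->Phys.s2 = 0.0;
  X->Phys.Sz = 0.0;

  switch (X->Def.iCalcModel) {
  case MODEL_HUBBARD:    totalspin_Hubbard_stub(X, vec);   break;
  case MODEL_HUBBARD_GC: totalspin_HubbardGC_stub(X, vec); break;
  case MODEL_SPIN:       totalspin_Spin_stub(X, vec);      break;
  case MODEL_SPIN_GC:    totalspin_SpinGC_stub(X, vec);    break;
  default:               return -1; /* assumption: unknown model is an error */
  }
  return 0;
}
```

A caller would fill in `X->Def.iCalcModel`, pass the eigenvector, and read `X->Phys.s2` and `X->Phys.Sz` afterwards. Keeping the switch in a single parent function means callers such as `phys()` do not need to know which model is active.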

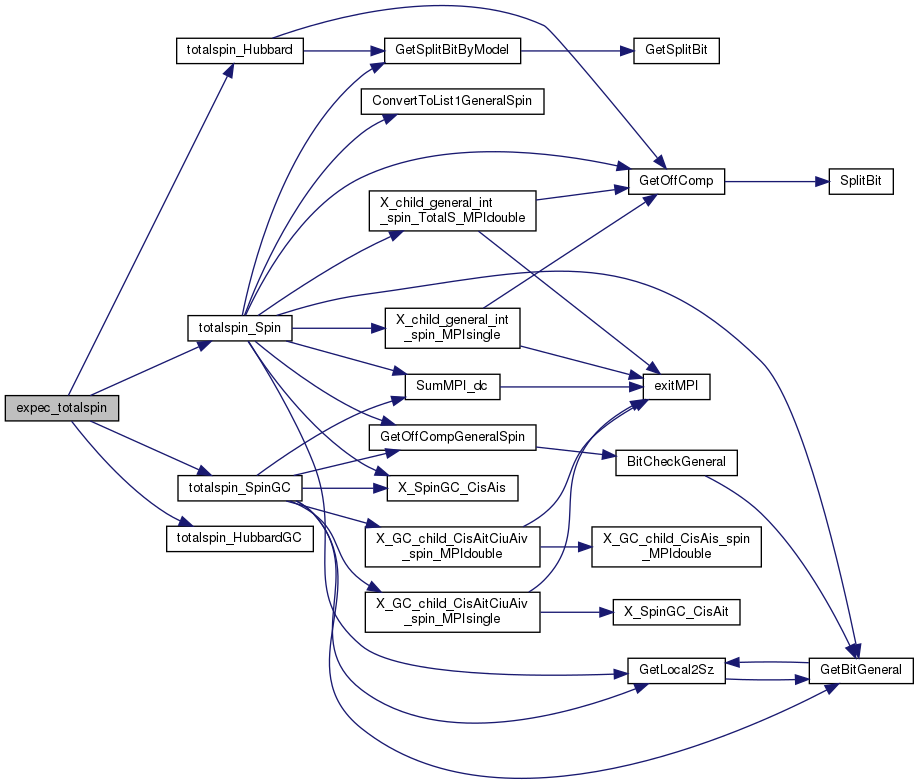

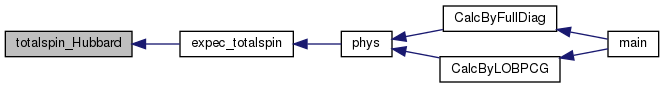

Here is the call graph for this function:

Here is the caller graph for this function:

◆ expec_totalSz()

`int expec_totalSz(struct BindStruct *X, double complex *vec)`

Definition at line 649 of file expec_totalspin.c.

References BindStruct::Def, DefineList::iCalcModel, BindStruct::Large, LargeList::mode, BindStruct::Phys, PhysList::Sz, DefineList::Total2SzMPI, totalSz_HubbardGC(), and totalSz_SpinGC().

Referenced by CalcByLanczos().

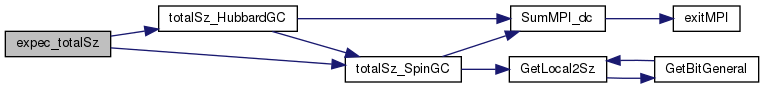

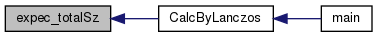

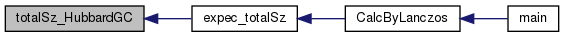

Here is the call graph for this function:

Here is the caller graph for this function:

◆ totalspin_Hubbard()

`void totalspin_Hubbard(struct BindStruct *X, double complex *vec)`

Calculates the total spin for the Hubbard model.

- Parameters
  - [in,out] X: data list of calculation parameters
  - vec: eigenvector
- Version 0.1

Definition at line 88 of file expec_totalspin.c.

References BindStruct::Check, BindStruct::Def, GetOffComp(), GetSplitBitByModel(), DefineList::iCalcModel, CheckList::idim_max, list_1, list_2_1, list_2_2, DefineList::Nsite, DefineList::NsiteMPI, BindStruct::Phys, PhysList::s2, PhysList::Sz, and DefineList::Tpow.

Referenced by expec_totalspin().

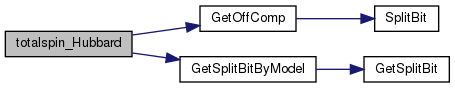

Here is the call graph for this function:

Here is the caller graph for this function:

◆ totalspin_HubbardGC()

`void totalspin_HubbardGC(struct BindStruct *X, double complex *vec)`

Calculates the total spin for the Hubbard model in the grand canonical ensemble.

- Parameters
  - [in,out] X: data list of calculation parameters
  - vec: eigenvector
- Version 0.1

Definition at line 161 of file expec_totalspin.c.

References BindStruct::Check, BindStruct::Def, CheckList::idim_max, DefineList::NsiteMPI, BindStruct::Phys, PhysList::s2, PhysList::Sz, and DefineList::Tpow.

Referenced by expec_totalspin().

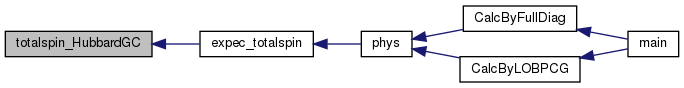

Here is the caller graph for this function:

◆ totalspin_Spin()

`void totalspin_Spin(struct BindStruct *X, double complex *vec)`

Calculates the total spin for the spin model.

- Parameters
  - [in,out] X: data list of calculation parameters
  - vec: eigenvector
- Version 0.2: modified for hybrid parallelization.
- Version 0.1

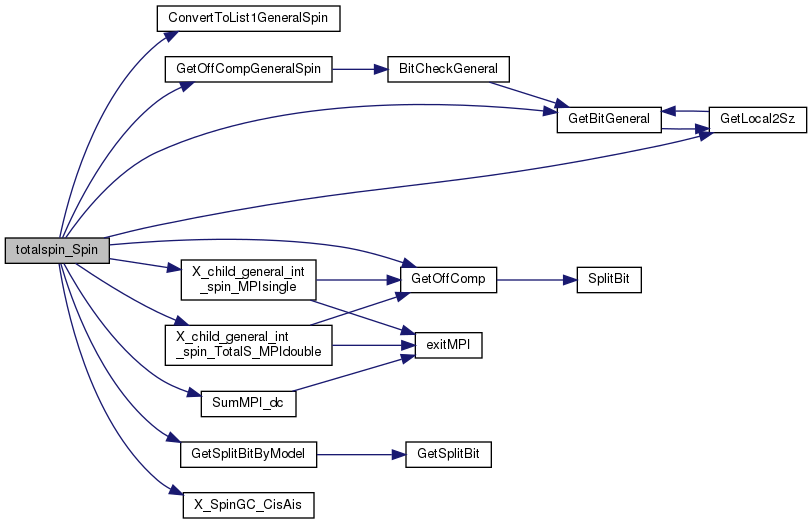

Definition at line 233 of file expec_totalspin.c.

References BindStruct::Check, ConvertToList1GeneralSpin(), BindStruct::Def, FALSE, GetBitGeneral(), GetLocal2Sz(), GetOffComp(), GetOffCompGeneralSpin(), GetSplitBitByModel(), DefineList::iCalcModel, CheckList::idim_max, DefineList::iFlgGeneralSpin, list_1, list_2_1, list_2_2, myrank, DefineList::Nsite, DefineList::NsiteMPI, BindStruct::Phys, PhysList::s2, CheckList::sdim, DefineList::SiteToBit, SumMPI_dc(), PhysList::Sz, DefineList::Tpow, TRUE, X_child_general_int_spin_MPIsingle(), X_child_general_int_spin_TotalS_MPIdouble(), and X_SpinGC_CisAis().

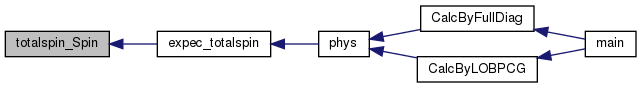

Referenced by expec_totalspin().

Here is the call graph for this function:

Here is the caller graph for this function:

◆ totalspin_SpinGC()

`void totalspin_SpinGC(struct BindStruct *X, double complex *vec)`

Calculates the total spin for the spin model in the grand canonical ensemble.

- Parameters
  - [in,out] X: data list of calculation parameters
  - vec: eigenvector
- Version 0.2: added support for calculating the total spin for general spin.
- Version 0.1

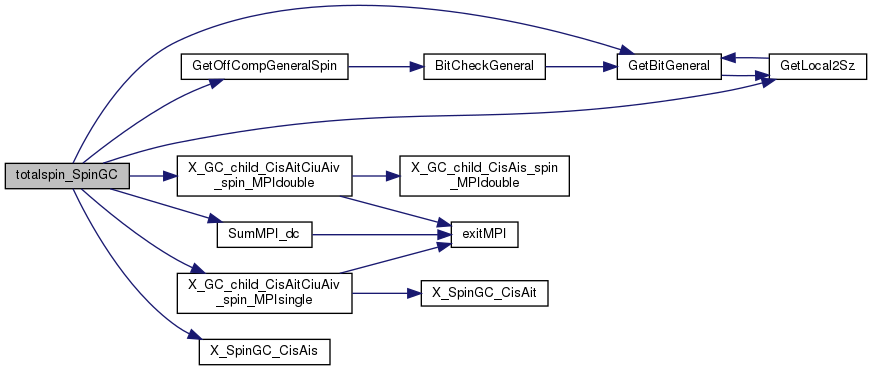

Definition at line 424 of file expec_totalspin.c.

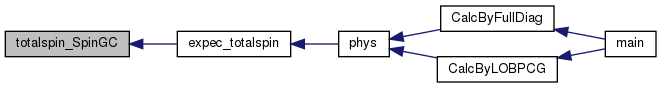

References BindStruct::Check, BindStruct::Def, FALSE, GetBitGeneral(), GetLocal2Sz(), GetOffCompGeneralSpin(), CheckList::idim_max, DefineList::iFlgGeneralSpin, BindStruct::Large, LargeList::mode, myrank, DefineList::Nsite, DefineList::NsiteMPI, BindStruct::Phys, PhysList::s2, DefineList::SiteToBit, SumMPI_dc(), PhysList::Sz, DefineList::Tpow, X_GC_child_CisAitCiuAiv_spin_MPIdouble(), X_GC_child_CisAitCiuAiv_spin_MPIsingle(), and X_SpinGC_CisAis().

Referenced by expec_totalspin().
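To make the quantity concrete, here is a minimal single-process sketch of a total-spin evaluation for spin-1/2 sites over the full Hilbert space (all Sz sectors, as in a grand canonical calculation). It is not the implementation in expec_totalspin.c: it ignores MPI and general spin, and the function name `total_spin_half`, the result struct, and the basis encoding (bit `i` of the state index set means spin up on site `i`) are assumptions made for illustration. It uses the identity that S² is the sum of S_i·S_k over all site pairs, where each site contributes 3/4 on the diagonal and each pair of antiparallel spins also contributes a spin-exchange term.

```c
#include <assert.h>
#include <complex.h>
#include <stddef.h>

/* Hypothetical result container for <S^2> and <Sz_total>. */
struct SpinExpectation { double s2; double sz; };

/* vec has 2^nsite amplitudes; bit i of the index set = spin up on site i
   (assumed encoding). */
struct SpinExpectation total_spin_half(int nsite, const double complex *vec)
{
  assert(vec != NULL && nsite > 0 && nsite < 31);
  const unsigned long dim = 1UL << nsite;
  double complex s2 = 0.0;
  double sz = 0.0;

  for (unsigned long j = 0; j < dim; j++) {
    const double w = creal(vec[j] * conj(vec[j])); /* |vec[j]|^2 */
    double diag = 0.75 * nsite;                    /* sum_i S_i.S_i = 3N/4 */
    int n_up = 0;

    for (int i = 0; i < nsite; i++) {
      const unsigned long bi = (j >> i) & 1UL;
      n_up += (int)bi;
      for (int k = i + 1; k < nsite; k++) {
        const unsigned long bk = (j >> k) & 1UL;
        if (bi == bk) {
          diag += 0.5;  /* 2 * Sz_i Sz_k = +1/2 for parallel spins */
        } else {
          diag -= 0.5;  /* 2 * Sz_i Sz_k = -1/2 for antiparallel spins */
          /* Exchange term S+_i S-_k + S-_i S+_k flips both spins. */
          const unsigned long jf = j ^ ((1UL << i) | (1UL << k));
          s2 += conj(vec[jf]) * vec[j];
        }
      }
    }
    s2 += w * diag;
    sz += w * (n_up - 0.5 * nsite); /* (n_up - n_down) / 2 */
  }

  struct SpinExpectation out = { creal(s2), sz };
  return out;
}
```

For two sites, the singlet `(|01> - |10>)/sqrt(2)` yields S² = 0 and the fully polarized state `|11>` yields S² = 2 with Sz = 1, matching S(S+1) for S = 0 and S = 1. The cost grows as 2^N · N², so this form is only suitable for small checks.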

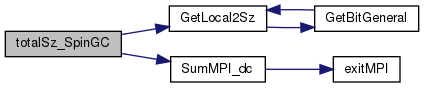

Here is the call graph for this function:

Here is the caller graph for this function:

◆ totalSz_HubbardGC()

`void totalSz_HubbardGC(struct BindStruct *X, double complex *vec)`

Calculates the total Sz for the Hubbard model in the grand canonical ensemble.

- Parameters
  - [in,out] X: data list of calculation parameters
  - vec: eigenvector
- Version 0.1

Definition at line 688 of file expec_totalspin.c.

References BindStruct::Check, BindStruct::Def, CheckList::idim_max, myrank, DefineList::Nsite, DefineList::NsiteMPI, BindStruct::Phys, SumMPI_dc(), PhysList::Sz, totalSz_SpinGC(), and DefineList::Tpow.

Referenced by expec_totalSz().
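As an illustration of what a total-Sz expectation value looks like for a fermionic basis, the sketch below sums (n_up - n_down)/2 over all basis states, weighted by the squared amplitude. It is a single-process sketch, not the code in expec_totalspin.c. The function name `total_sz_hubbard` and the bit layout (bit `2i` = spin-up orbital of site `i` occupied, bit `2i+1` = spin-down orbital occupied) are assumptions; the layout actually encoded by `DefineList::Tpow` is not described on this page.

```c
#include <assert.h>
#include <complex.h>
#include <stddef.h>

/* vec has 4^nsite amplitudes, one per occupation pattern.
   Assumed layout: bit 2i = up orbital of site i, bit 2i+1 = down orbital. */
double total_sz_hubbard(int nsite, const double complex *vec)
{
  assert(vec != NULL && nsite > 0 && nsite < 16);
  const unsigned long dim = 1UL << (2 * nsite);
  double sz = 0.0;

  for (unsigned long j = 0; j < dim; j++) {
    /* Twice the Sz eigenvalue of basis state j: n_up - n_down. */
    int twice_sz = 0;
    for (int i = 0; i < nsite; i++) {
      twice_sz += (int)((j >> (2 * i)) & 1UL);
      twice_sz -= (int)((j >> (2 * i + 1)) & 1UL);
    }
    const double re = creal(vec[j]);
    const double im = cimag(vec[j]);
    sz += 0.5 * (re * re + im * im) * twice_sz;
  }
  return sz;
}
```

For a single site, the state with only the up orbital occupied gives +1/2, the state with only the down orbital occupied gives -1/2, and the empty and doubly occupied states give 0. Because Sz is diagonal in the occupation basis, no off-diagonal terms are needed, unlike the S² calculation.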

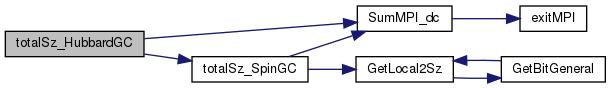

Here is the call graph for this function:

Here is the caller graph for this function:

◆ totalSz_SpinGC()

`void totalSz_SpinGC(struct BindStruct *X, double complex *vec)`

Calculates the total Sz for the spin model in the grand canonical ensemble.

- Parameters
  - [in,out] X: data list of calculation parameters
  - vec: eigenvector
- Version 0.1

Definition at line 747 of file expec_totalspin.c.

References BindStruct::Check, BindStruct::Def, FALSE, GetLocal2Sz(), CheckList::idim_max, DefineList::iFlgGeneralSpin, BindStruct::Large, LargeList::mode, myrank, DefineList::Nsite, DefineList::NsiteMPI, BindStruct::Phys, DefineList::SiteToBit, SumMPI_dc(), PhysList::Sz, and DefineList::Tpow.

Referenced by expec_totalSz(), and totalSz_HubbardGC().

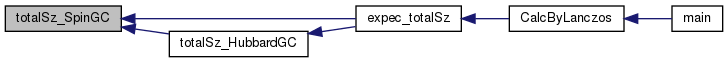

Here is the call graph for this function:

Here is the caller graph for this function: